When tasked with upgrading our long-neglected ‘intranet’ last year, my first job was to work out just how much data was out there and what needed to be upgraded.

The masterpage had been butchered at some point in the past, so most of the pages were missing navigation, making it hard to follow sites down the hierarchy. And what a hierarchy! The architects of the original instance apparently never worked out that you could have more than one document library per site, or that you could create folders. The result is the typical site sprawl. To add to the fun, some sites were created using a custom template that no longer works, and others didn’t have any files in them at all.

In order to create a list of all the sites and how they relate, you can use a PowerShell script:

[code lang="PowerShell"]

# Load the SharePoint server object model (run this on a SharePoint server)
[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SharePoint") > $null

function global:Get-SPSite($url){
    return New-Object Microsoft.SharePoint.SPSite($url)
}

# Recursively writes one line per subsite: ID;ParentID;Title;URL
function Enumerate-Sites($website){
    foreach($web in $website.GetSubwebsForCurrentUser()){
        [string]$web.ID+";"+[string]$web.ParentWeb.ID+";"+$web.Title+";"+$web.Url
        Enumerate-Sites $web
        # Each subsite is disposed once, here, after its own branch has been walked
        $web.Dispose()
    }
}

# Change this variable to your site collection URL
$siteCollection = Get-SPSite("http://example.org")
$start = $siteCollection.RootWeb
Enumerate-Sites $start

# Disposing the site collection also releases its RootWeb
$siteCollection.Dispose()

[/code]

It’s actually pretty simple since we take advantage of recursion. It’s pretty much a matter of getting a handle to the site collection’s root site, then, for each subsite, outputting its GUID and its parent site’s GUID followed by the human-readable title and URL. Then you do the same for that subsite, and so on down the branch.

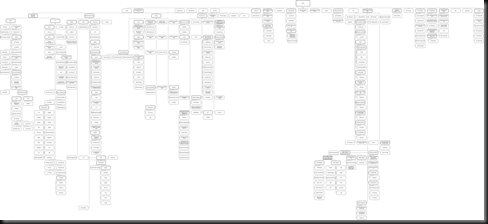

The reason we’re outputting the GUIDs is that we can use them to relate each site to the rest. The script outputs straight to the console, but if you pipe the output to a text file you can use it as the input to an org-chart diagram in Visio. The results are terrifying:

Each node on that diagram is a site that may have one document, or thousands. Or nothing. Or the site may just not work. As it turned out, when asked to prioritize material for migration, the stakeholders decided it would be easier just to move the day-to-day stuff and leave the old farm going as an ‘archive’. Nothing like asking a client to do work to get your scope reduced!
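For the text-file step mentioned above, here is a minimal sketch of turning the semicolon-delimited lines into a CSV with named columns, which tends to be easier to map when importing into a diagramming tool. The sample GUIDs, the column names and the `sites.csv` file name are all made up for illustration; in practice you would read the real lines with something like `Get-Content` on the file you piped the script into. It also assumes no site title contains a semicolon.

```powershell
# Hypothetical sample lines in the ID;ParentID;Title;Url format the script emits
$lines = @(
    "11111111-1111-1111-1111-111111111111;00000000-0000-0000-0000-000000000000;HR;http://example.org/hr",
    "22222222-2222-2222-2222-222222222222;11111111-1111-1111-1111-111111111111;Policies;http://example.org/hr/policies"
)

# Parse each line into an object with named properties (column names are assumptions)
$rows = $lines | ConvertFrom-Csv -Delimiter ";" -Header "ID","ParentID","Title","Url"

# Write a comma-separated file with a header row (file name is an assumption)
$rows | Export-Csv -Path "sites.csv" -NoTypeInformation
```

With a header row in place, the ID column can serve as each node’s unique name and ParentID as the column that says which node it reports to.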

As a final note on this script: it is recursive, so it could (according to my first-year Comp. Sci. lecturer) theoretically balloon out of control and consume all the resources in the visible universe, before collapsing into an ultradense black hole and crashing your server in the process. You’d have to have a very elaborate tree structure for that to happen, though, in which case you’d probably want to partition it off into separate site collections anyway.
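If the recursion depth ever did become a worry, the usual alternative is to walk the tree with an explicit stack instead of nested function calls. The sketch below shows the idea on a small in-memory tree of hashtables; the tree, the `Get-TreeTitles` name and the `Title`/`Children` keys are hypothetical stand-ins, not SharePoint objects. Applying the same pattern to real sites would also need some care over when each web gets disposed.

```powershell
# Hypothetical in-memory tree standing in for a site hierarchy
$tree = @{
    Title = "Root"
    Children = @(
        @{ Title = "HR"; Children = @(
            @{ Title = "Policies"; Children = @() }
        ) },
        @{ Title = "IT"; Children = @() }
    )
}

# Walks the tree without recursion: pending nodes wait on an explicit stack
function Get-TreeTitles($root) {
    $stack = New-Object System.Collections.Stack
    $stack.Push($root)
    while ($stack.Count -gt 0) {
        $node = $stack.Pop()
        $node.Title                      # emit the current node
        foreach ($child in $node.Children) {
            $stack.Push($child)          # visit the children later
        }
    }
}

$titles = @(Get-TreeTitles $tree)
```

Every node is still visited exactly once, but the order differs from the recursive version because the most recently pushed child is handled first.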