As promised, I’m writing a garden update. I don’t think anyone would accuse gardening of being hip or trendy, but I do enjoy wandering about, trance-like, pulling weeds, watering, clipping stray branches or otherwise tending. Anyhow, here’s what’s been going on.

First up, here’s a 360 of the whole place:

You’re supposed to be able to scroll around that, like the inside of a big sphere, but I can’t be bothered looking for the plugin. I made it with my new fancy-pants Google Nexus phone, which we’ve finally managed to procure at work.

As you can see, the ‘grass’ is making a very slow colonization of the dustbowl that resulted after my landlord dumped a pile of builder’s rubble soil on the lawn. This could be helped along with some grass seed, but such things are hard to acquire in Bangkok, where people are more enthusiastic about concrete carparks and potted plants in their open spaces. As it is, I’ve been scattering birdseed, which grows into very convincing grass, but the birds don’t seem to have got the memo and keep eating it.

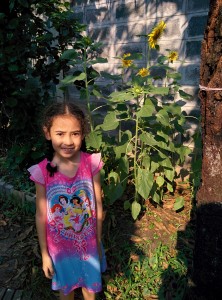

Regular readers will be stunned to see how rapidly the corn has ascended. I co-opted a small child for this photo to give a sense of scale, but I can assure you that the corn is taller than me. Still, it’s been two months, so there should be a bit of action. There are even a few ears developing, which has my youngest quite excited.

The carrots aren’t as impressive; they’re not getting much sun. We pulled up one of these little plants today and there was only a little root, which was not orange. I expect we won’t see much until the corn is done and opens up this bit of garden bed, although I did prune the roseapple tree of its lower, eye-gouging branches today, so perhaps we’ll see some more robust root vegetables soon.

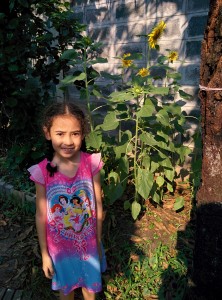

The sunflowers have bloomed.

They’re being made to face the wall as apparently that’s where the reflected light is coming from.

Again, in this shot a young child of indeterminate stature gives scale.

You may recall that the last column ended on a bit of a cliffhanger. Will Dan’s herb plot give bounty? Or will it, like most things I’ve put in the garden, stubbornly stay beneath the soil and only host weeds and spiders? As you can see from the photo I’ve got a respectable bed of coriander and some other unidentifiable yet aromatic herbs.

It must be a good time of year as I only put in this bok-choi and broccoli a week or two ago and it’s already going mental. I put this in as a bit of a punt as I expect the lot to get eaten by caterpillars.

Here are the Kooper Kids digging up the lawn and playing while mocking their father’s agricultural follies.

But they’d better watch it because I’ve got this fucker. The sword that appeared in last month’s post turned out to be rubbish. I went at it with the grinding attachment on the drill and spent many hours with a whetstone, but it stubbornly refuses to be anything but a letter opener, and a blunt one at that. Big blades are often made out of old leaf springs. Spring steel is tough, flexible (duh!) and soft enough to take a good edge. The Banana Splitter was crap stainless so hard it could apparently survive reentry. And the bottle opener on the debole barely works.

Anyhow, I bought this Hong-Kong arm-pruner off the street from a purveyor of the sharp and pointy (really off the street, from a little pushcart stuffed with blades), and it’s crazy sharp with a mirror finish, all the better to reflect sunlight into an enemy’s eyes as you circle him (or her) in one-on-one combat. It’s a little top-heavy, which I don’t really like, and the hilt is too short for my hand, which is a shame as that makes it a little uncomfortable to use for long. Still, I’m able to cut clean through tree branches twice the width of my thumb if I get a good swing, which is simultaneously handy and terrifying. I’m going to have to be careful brandishing this about.

So that’s it for this episode. Look out for the next update, where I’ll be beating off triffids with a halberd or something. I leave you with a pic of Mrs Rabbit enjoying her hutch.